This article by Thinkful student Greg Araya was originally published on towardsdatascience.com.

Like so many others in these nutty apocalyptic-esque days, I spent my first few weeks alone in my small Chicago apartment living as a constantly tightening rubber band ball of stress, anxiety, and misery. I was concerned about the many gloomy possibilities that I perceived to now be swirling directly outside my door. Naturally, I quickly clung to some creature comforts that I thought may help, like eating enough junk food to keep a local restaurant in business for a month, playing Animal Crossing until my eyes were dancing, and pretending that the constant video calls with friends and family filled the void.

Another one of my coping mechanisms was quickly binging a show on Netflix that I’m sure you’ve heard of by now: Tiger King. Following this show was also the release of a meme that follows a series of memes from a writer that I appreciate, Keaton Patti. I’m referring to his famous “I made a bot watch 1000 hours of (popular show/movie)” series, which I categorize as top tier comedy. If you haven’t heard of them, definitely pop over to his twitter for a solid laugh. Anyways, in order to dig myself out of the rocky hole of quarantine drag (cue Alice in Chains), I decided to try and imitate this with some Python code and see how well I could churn out sentences and actions by the main characters.

It honestly turned out better than I expected, so I decided to share my code with the world so that maybe, just maybe, I can help someone else out of their rut, even if just for a moment. Below you’ll see my fun little function that uses Markov Chains (big thanks to the creators of Markovify) and labeled subtitles data from Tiger King to produce sentences that sound like the main characters of the show, along with some actions from the show in between. For more detail on how I went about this keep on scrolling below that, but for the impatient, just click “Run!” (Note: the first time you run it it’s a bit slow, but once everything is loaded up it runs pretty quickly. It also works/looks better on desktop rather than mobile.)

My Approach

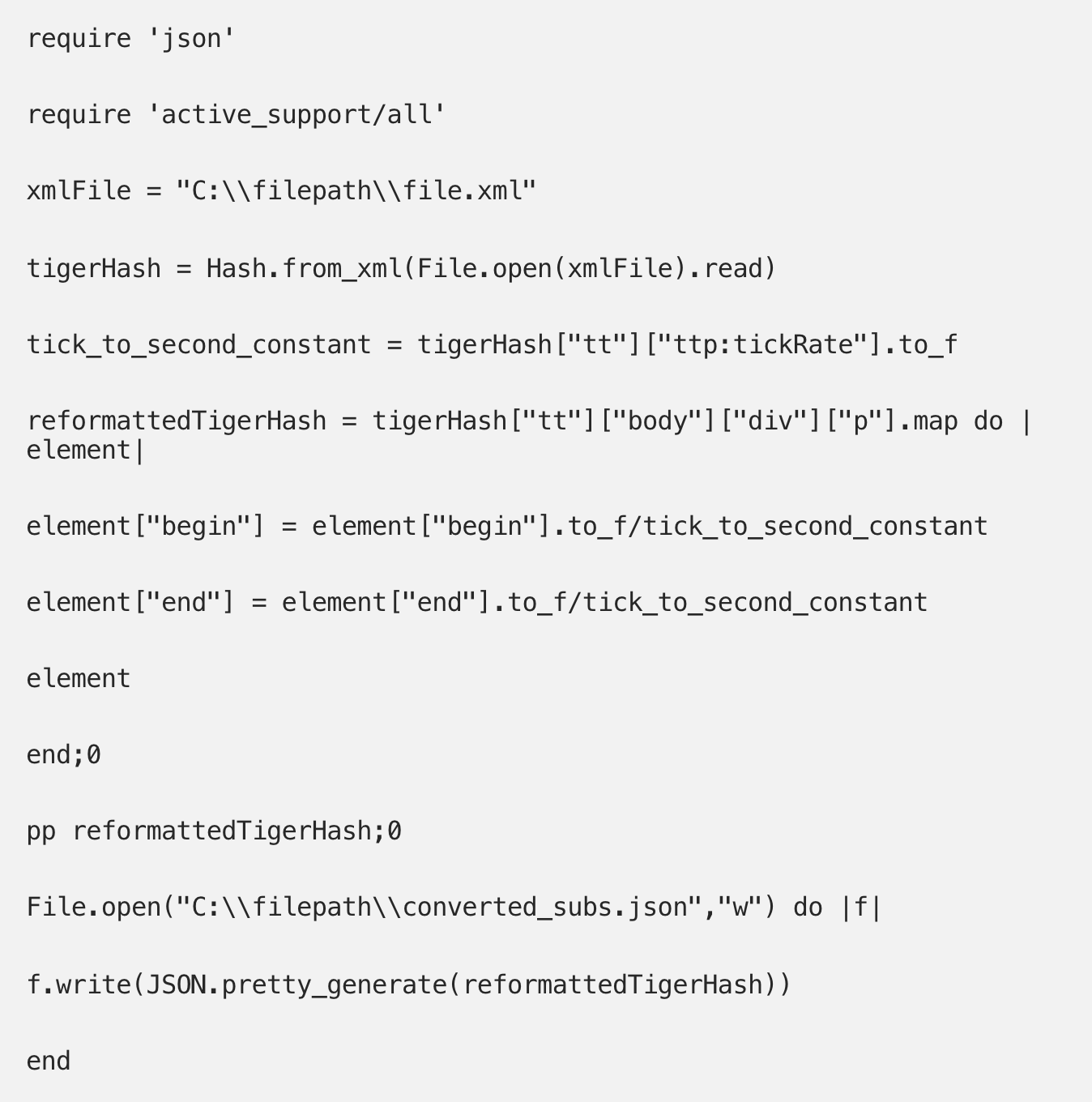

Before I could do any analysis, I needed to get data. Through a few minutes of google searching, I found out that you can get the subtitles from Netflix while watching the show, so I started there. Unfortunately when you receive the subtitle file, it’s in an XML format, so I had to convert that into JSON. I was happy to find that in the subtitles the quotes had timestamps attributed to them, but they were measured in “ticks” instead of something like minutes and seconds, so I also converted those while changing to JSON. I did this with a short Ruby code that I’ll display below.

I probably won’t bog this post down with too much of my code, because the cleaning process was pretty long, but the steps below should get you to a good starting place once you get the XML files from Netflix. I will be uploading code to my GitHub too so feel free to check that out (https://github.com/TheGregArayaSet).

Following this, I set about converting the JSON file into a Pandas dataframe in Python. Through some exploratory data analysis, I then realized that the subtitles didn’t always have the characters labeled. I thought about how to deal with this while I continued to get the Pandas dataframe into a usable form and eventually came up with another idea while conferring in a friend of mine (looking at you Taylor B). Amazon has a service called “AWS Transcribe,” which is geared to listen to medical professionals talking to each other and/or patients, write down what it thinks they’re saying word by word, and automatically label potential speakers.

I went back on Netflix, grabbed the audio of each episode using Audacity, created an AWS profile, and ran the audio through Amazon Transcribe to attempt to get labels for the characters that I could then merge back into the subtitle files from Netflix. Before trying yourself, I should let you know that Amazon Transcribe can only handle 10 speakers per episode, so like the subtitle files these too were not perfect. Their labeling does come with confidence estimates though so that’s helpful. Regardless, after waves of cleaning, hand washing, cursing, and gnashing of teeth, I was able to get a majority of the speakers labeled by comparing the speakers that were graciously labeled in the Netflix subtitles to the confident guesstimates from Amazon Transcribe.

With only a few spaces left open due to both low confidence from Amazon and a lack of labeling from Netflix, I was happy to peruse these lonely few and fill them in manually. After all, it was the perfect excuse to binge the whole show again. Like all good Data Science projects, the cleaning and combining took a large majority of my time. I didn’t include any of it in the above coding however because it took a while to run and I knew most people just want the instant gratification of clicking the run button for immediate meme potential. However, now that I had the data sorted out, the real fun math could begin.

Markov Chains Uncaged

In order to create sentences that actually made sense and weren’t just pushing out the most common words that each character used, I utilized a mathematical system known as Markov chains. If you don’t know what a Markov chain is, they are basically a system that describes transitions from one state to another. Working as a life/health actuary, we usually would use a Markov chain to determine how likely someone is going to become sick, get injured, recover from being sick or injured, or die given their current state (which could be any of those already). If we are looking at one person who is currently healthy, we assume that in the next month (or whatever period of time we’re looking at) they have some probability of transitioning into any of these states (still healthy, injured, sick, dead, etc).

However, what is really interesting about Markov chains is that they are memoryless. The way my actuarial friends and I put it “It’s not where you’re from it’s where you’re at.” In other words, the probabilities associated with transitioning from one state to another do not depend on your past states. That is, if you are sick right now, it doesn’t matter that you were healthy last month when determining what state you might be in next month. If someone is dead right now, it doesn’t matter if they were injured a month ago, their chance of changing to a different state is still zero because they are dead right now. If you’re still lost, and/or are more of a visual learner, I enjoy the videos by patrickJMT about this topic (https://youtu.be/uvYTGEZQTEs). So how does this apply to make sentences? Well, we can think of the “current state” as the current word (or words) that are being considered to choose the next word. If I told you that we’re looking at Joe Exotic’s text, and the sentence begins with the word “That”, I imagine there would be a myriad of choices for the next word.

We can then determine the percentage chance of each of these words by counting up how many times each unique word he says in the show following the word “that” (whether at the beginning of the sentence or not). Let’s imagine that the next word has a high probability of being vulgar, what do you think it would be? My mind goes right to “b**ch”, and then once we have that, looking to predict the next word my mind would ask, what does he usually say after “b**ch”? Well, I imagine there’s a high chance it’s “Carole,” for obvious reasons. You could choose to ignore the “that” from the beginning of the sentence while in the “b**ch” state, or you could choose to include it, but either way your current “state” determines the next word and not the words before it. The learned note to make here is that you don’t want your current state to include too many words, because then you just start printing exact sentences from your corpus, and that’s no fun.

Final Data Petting

While I was researching ways to make my process better, I stumbled upon a python package called Markovify (https://github.com/jsvine/markovify) that was already performing my task but in a much sleeker way. Installing it and running it over my data, I was elated at how well they worked together and immediately sent some friends a few of my first test runs. Following this, I exported the final cleaned data I had as CSV files so that people who wanted to run the code wouldn’t have to wait through the whole cleaning process and wrote up the code you see above. So there you have it, my full quest from quarantine zero to mathematical meme hero!

I hope you have enjoyed this project that I’ve cooked up, and please share it along with any of your friends who might get a kick out of it too! If you are interested in seeing some of my other projects feel free to check out my GitHub (https://github.com/TheGregArayaSet). I’m also open to some constructive criticism on how this project could be made better since I’m still improving my Python abilities.

Hot steak tips off the Walmart truck, for the people who made it this far:

- If you’re interested in limiting the speakers who are represented in the output, you can delete some from the “random_speakers” list on line 15.

- If you also want to choose how many sentences are output, you can edit the variable “num_sentences” on line 21.

- If you want to see longer or shorter sentences, you can set the “set_length” string variable to either “Long” or “Short.”

- Feel free to play around with it since repl.it (who is hosting the code) will give everyone the code as I have it on my end every time they load up this blog post.

- Again, as I said above, the first run is a little slow but every run after that will be rather quick.

This article was written by Thinkful student Greg Araya, originally published on towardsdatascience.com. Greg is currently studying data science.